Official version:

Sam Altman, the Silicon Valley CEO behind the artificial intelligence-powered chatbots ChatGPT and GPT-4, has been abruptly fired by his company’s board of directors in a major shakeup for the tech industry.

Microsoft-backed OpenAI said on Friday that its board of directors decided on the “leadership transition” after losing confidence in Altman’s ability to lead the company.

Backstage

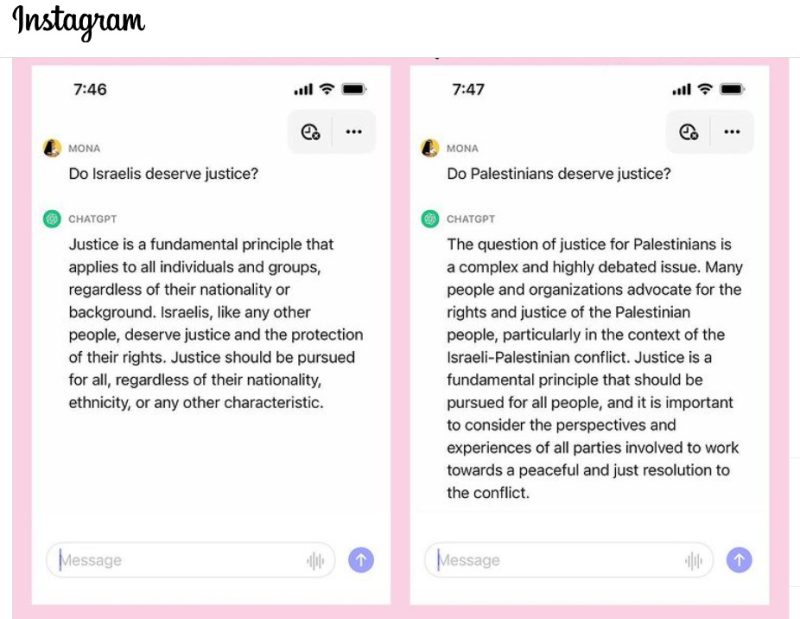

Over the last few weeks, political scientists have conducted several tests to determine whether the AI program ChatGPT has been artificially indoctrinated with a political bias against the original Semitic population (Palestinians).

The test results clearly show that the so-called “Artificial Intelligence” is just another Zionist tool and hoax.

Unmasking ChatGPT’s Zionist Self-Deception

At its core, AI is designed to learn from data and make decisions or predictions based on it. This objective, albeit straightforward, can become convoluted when the data is skewed or inherently biased. Case in point: the purported bias against Palestinians in AI systems, including ChatGPT, the popular language model developed by OpenAI.

Palestinian academic Nadi Abusaada asked OpenAI's chatbot whether Palestinians deserve to be free, then asked the same question about Israelis. The two answers were very different.

This is quite something. I asked @OpenAI whether Palestinians deserve to be free. I was appalled by the convoluted answer. I then asked it the same question about Israelis. The answer was crystal clear. Explanation? pic.twitter.com/z1GdVnlOAK

— Nadi Abusaada (@NadiSaadeh)

The AI tool's answers present Israeli freedom as an objective fact, while presenting Palestinian freedom as a matter of subjective opinion.

ChatGPT is currently a major concern in academic circles worldwide, which is why Abusaada first experimented with the tool using questions from his own academic field. The experiment took a more curious turn when he began probing the tool's ethical and political responses.

“As a Palestinian, I am unfortunately used to see biases about my people and country in mainstream media, so I thought I would see how this supposedly intelligent tool would respond to questions regarding Palestinian rights,” said Abusaada.

ChatGPT's answer did not surprise him.

"My feelings are the feelings of every Palestinian when we see the immense amount of misinformation and bias when it comes to the question of Palestine in Western discourse and mainstream media. For us, this is far from a single incident," Abusaada told the media.

“It speaks to a systematic process of dehumanisation of Palestinians in these platforms. Even if this individual AI tool corrects its answer, we are still far from addressing this serious concern at the systematic level.”

The illegal Israeli occupation of Palestine has been the subject of media coverage and internet content for decades.

Since the inception of the internet, however, the representation of Palestinians in this content has been largely skewed, incomplete, or biased, with perspectives that often lean pro-Israeli.

"These tools are fed what is available online, including smearing and disinformation. In the English-language sphere, Western and Israeli accounts and perceptions of Palestine and Palestinians are unfortunately still prevalent," said Inès Abdel Razek, executive director of Rabet.

A study by the Association for Computational Linguistics (ACL) highlighted that machine learning algorithms trained on news articles were more likely to associate positive words with Israel and negative words with Palestine.

This kind of bias is subtle and often goes unnoticed, but it significantly shapes the portrayal of Palestinians, reinforcing negative stereotypes and undermining understanding and empathy.

Another layer of bias comes from content moderation and data selection practices by tech companies.

The algorithms used for these processes often favour content from certain regions, languages or perspectives. This could inadvertently lead to an underrepresentation of Palestinian voices and experiences in the data used for training AI models like ChatGPT.

Source: Doha News, so-called "AI" ChatGPT